How do we design good small-scale research questions?

And how do we avoid wasting those precious respondents that are often not easy to recruit?

Philip Morgan

I got a good question about this research note: "How do you structure the representation (as data) and presentation (as a web form or series of sub-questions?) of a good question (like your #1) to ensure that your recruiting effort pays off maximally? In other words, 'I hesitate to give this question as is and leave a large blank for a participant to fill out, because they may misunderstand parts or skips parts entirely'".

Short version:

- Because when we start a small-scale research (SSR) initiative we don't know what we don't know, we often intentionally structure our question "badly" and "wastefully" in order to learn how to better structure it.

- We combine this intentionally wasteful approach with a frugal approach to the first round of recruiting so we don't waste our entire pool of potential respondents on a poorly-designed data collection method.

In a way, my answer boils down to: you can't avoid screwing up, so limit the damage and learn iteratively. Here's what I mean by that.

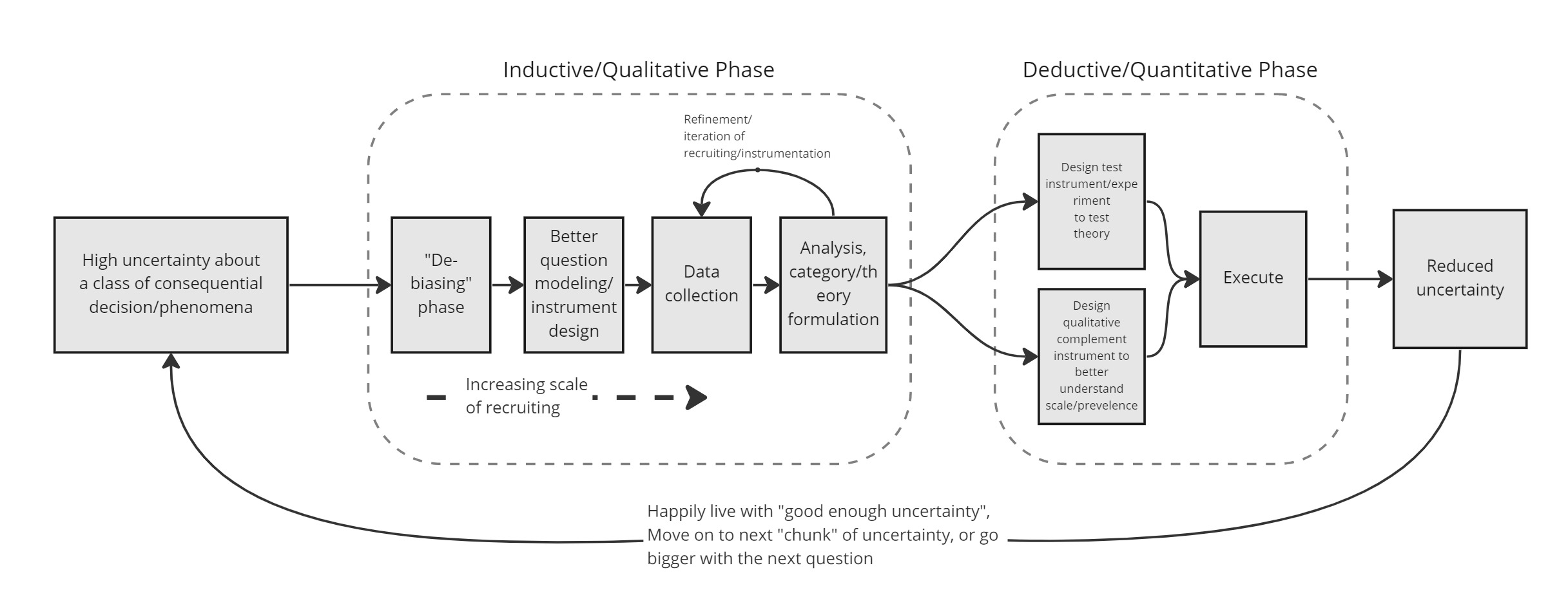

First, let's look at the entire small-scale research (SSR) process in stylized form:

Often (but not always) what causes the uncertainty we seek to reduce with SSR is:

- We lack a good theory/model that explains how and why X happens

- We labor under false beliefs/assumptions about how/why X happens

- We don't understand the system-level interactions that impinge upon X

This means that if we formulate a research question about X, that question -- even if it's appropriately scoped for SSR -- might be conceived or phrased in a way that is biased (usual disclaimer: I'm talking about bias in the research context here, which means anything that causes a problematic distortion in your research results).

That's why we need what I used to call a "de-biasing" step in the first inductive/qualitative phase of our research. During this step, we do exactly what you (rightly) identify as a bad practice: we ask pretty broad questions and, if we're using a survey instead of interviews (which we often are, because of the efficiency of surveys), let respondents fill in open-ended, paragraph-length, free-text fields.

As you mention, respondents may "misunderstand parts or skips parts entirely", and if they do, we have learned something valuable: maybe the question wasn't clear, maybe it wasn't relevant, or maybe something else is wrong with it. A pattern of skipping or misunderstanding across multiple responses teaches us something we could not otherwise see. It might be a somewhat trivial issue with the question phrasing, or it might be a more fundamental problem with how we've conceived the question itself. As you might expect, you recruit a small sample for the de-biasing step.
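One way to spot such a pattern in a first small batch is to tally how often each question was left blank. Here is a minimal sketch in Python, assuming the responses are exported as a list of dicts keyed by question ID. The question IDs, the sample answers, and the `skip_rates` helper are all made up for illustration:

```python
from collections import Counter


def skip_rates(responses, question_ids):
    """Return the share of respondents who left each question blank."""
    skipped = Counter()
    for response in responses:
        for qid in question_ids:
            # Treat a missing key, None, and whitespace-only text as a skip.
            answer = response.get(qid) or ""
            if not answer.strip():
                skipped[qid] += 1
    total = len(responses)
    return {qid: (skipped[qid] / total if total else 0.0) for qid in question_ids}


# Hypothetical first-round responses (illustrative, not real data).
responses = [
    {"q1": "We asked around and hired the first decent shop.", "q2": ""},
    {"q1": "Formal RFP with three vendors.", "q2": "   "},
    {"q1": "", "q2": "Mostly price."},
    {"q1": "A referral from a friend.", "q2": None},
]

rates = skip_rates(responses, ["q1", "q2"])
for qid, rate in rates.items():
    print(f"{qid}: skipped by {rate:.0%} of respondents")
```

A high skip rate doesn't say why a question was skipped. It only flags which question to reread and rethink before the larger round. Misunderstandings still have to be found by reading the answers themselves.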

As to sample size specifics, consider the following:

- “If you know almost nothing, almost anything will tell you something.” --Douglas Hubbard (I love this quote's brevity, but if I were to expand it and add context that is in line with Hubbard's thesis, I would say: If you know almost nothing, almost any observation or measurement that is relevant to your uncertainty will tell you something.)

- This review of the sample sizes needed to achieve saturation indicates:

    - "We confirmed qualitative studies can reach saturation at relatively small sample sizes.

    - Results show 9–17 interviews or 4–8 focus group discussions reached saturation.

    - Most studies had a relatively homogenous study population and narrowly defined objectives."

In my ongoing research into custom software buyer behavior and preferences, it was at first not apparent to me that there is more than one category of buyer. Now, in hindsight, it's dazzlingly obvious. But we can get fixated on a particular configuration of the research question and have trouble going beyond its edges as we've defined them. If you had asked me back then "is there more than one category of buyer of custom software development services?" I would have readily said "of course!" and moved on to think about what those categories might be.

But things didn't go that way. It had to become apparent to me while analyzing my first batch of survey responses that, oh... look at this... some of these people seem to approach the task of finding a developer very casually and they stop the search as soon as they've found the first decent option and... huh... that doesn't really square with what I've heard about rigorous procurement processes and tough negotiations and approved vendor lists and on and on. This led to some deeper thinking about the research and the new idea that there are two categories of buyers: professional buyers who use more rigor and process in their buying behavior, and non-professional buyers who use less rigor in their search for a satisficing solution.

For me, this happened during the feedback loop labeled "Refinement/iteration of recruiting/instrumentation" rather than during the block labeled "Better question modeling/instrument design", but the idea is the same. We don't know what we don't know, and the grounded theory process that the first phase of SSR is loosely based on accommodates this kind of fluidity and iteration in the research method.

If you find this stuff interesting, pages 233-235 of this document do a nice job of describing how the fluid, subjective, generative nature of qualitative research fits into the greater rigor demanded by pre-registration.

Once we've moved past the "don't know what we don't know" part of the process, the question of how to design effective questions re-surfaces, because now we're going to try to assemble a larger sample, and so we don't want to waste that recruiting effort. Here's what's helped me with that:

- If you have the time for it, put your list of questions away and stop thinking about it for a while (a week, at least). Then come back to it with an ability to see what's really there more objectively and clearly.

- Look over your questions for any usages of metaphor, analogy, jargon, or local idiom. These are all places where a group of people from varied backgrounds could get tripped up by your question phrasing (see what I did there? :troll:).

- Get a few friends/colleagues to review your questions with a very critical eye.

- Look for opportunities to reduce friction and effort without reducing the quality of the data your questions can gather.

    - For example, if you've achieved saturation on some part of your larger research question, you can replace open-ended text fields with multiple-choice fields in order to reduce friction.
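On the "representation as data" part of the question, here is a minimal sketch of how a question might be stored so that it can move from an open-ended field to a multiple-choice field once saturation is reached. The `Question` class, the `to_multiple_choice` helper, and the example themes are hypothetical names for illustration, not a prescribed schema:

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Question:
    qid: str
    prompt: str
    kind: str = "open_text"  # "open_text" or "multiple_choice"
    choices: tuple = ()


def to_multiple_choice(question, themes, allow_other=True):
    """Turn an open-ended question into a multiple-choice one.

    `themes` are the recurring answers found while coding earlier responses.
    An "Other" option keeps room for answers not seen yet.
    """
    if not themes:
        raise ValueError("need at least one theme to build choices")
    other = ("Other (please describe)",) if allow_other else ()
    return replace(question, kind="multiple_choice", choices=tuple(themes) + other)


# Hypothetical question and themes (illustrative only).
open_q = Question(qid="q1", prompt="How did you find your developer?")
closed_q = to_multiple_choice(open_q, ["Referral", "Web search", "Formal RFP"])
print(closed_q.kind, closed_q.choices)
```

Keeping the original prompt and ID while only changing the field type makes it easier to compare first-round free-text answers with later multiple-choice answers. The "Other" option is a hedge against having declared saturation too early.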

Finally, treat your research participants as if every single one of them were going to donate some bone marrow to save your only child. Treat them like gold. Ways you can do this:

- Estimate the time required to participate in your survey/interview with high accuracy

- Thank them genuinely and profusely

- Follow through on any promises you make them

- Treat the data they share with you 100% in accordance with your promises

- Don't bury anything in fine print

I hope this was a useful response to your question!

Did I leave any of y'all with further questions? If so, let me know.

-P